library(keras)

install_keras()Training neural networks

Pre-lab activity

Please complete this activity before arriving at your section meeting. It should only take you about 15 minutes.

Python installation

We will use packages that interface to python from R to train neural networks in this lab. For this a current python installation is needed.

Select the download appropriate for your operating system here and follow installation instructions.

Keras and Tensorflow

Open RStudio and execute the following commands in the console. You should have already installed keras with other packages in the first lab; however, if library(keras) returns an error execute install.packages('keras') and then try again. This will install Tensorflow (for python) in a manner suitable for use in R, along with a few other packages.

To confirm the installation worked, try:

library(tensorflow)

tf$constant('Hello world')tf.Tensor(b'Hello world', shape=(), dtype=string)You may see a long message related to CUDA libraries in addition to the output shown above, but if you see this output at the end, your installation was successful.

If you did not see the expected output, try configuring a virtual environment for the installation explicitly as shown here.

If you are unable to troubleshoot after a short period of time, partner with a classmate for the lab activity and then ask for help from course staff.

Lab activity

Setup

Setup

Open a new script for this lab, copy-paste the code chunk below at the top of the script, and execute once.

# packages

library(tidyverse)

library(tidymodels)

library(tidytext)

library(keras)

library(tensorflow)

# data location

url <- 'https://raw.githubusercontent.com/pstat197/pstat197a/main/materials/labs/lab6-nn/data/claims-clean.csv'

# read in data

clean <- read_csv(url)Now partition the data into training and test sets.

Data partitioning

- Copy the code chunk below into your script but do not run the resulting lines.

- Coordinate with your neighbor: choose a new RNG seed and split proportion and input the same values in each of your scripts.

- Execute lines to partition the data.

# partition

set.seed(110325)

partitions <- clean %>%

mutate(text_clean = str_trim(text_clean)) %>%

filter(str_length(text_clean) > 5) %>%

initial_split(prop = 0.8)Now use the code chunk below to preprocess the training partition into a TF-IDF document term matrix (DTM), as before.

train_dtm <- training(partitions) %>%

unnest_tokens(output = 'token',

input = text_clean) %>%

group_by(.id, bclass) %>%

count(token) %>%

bind_tf_idf(term = token,

document = .id,

n = n) %>%

pivot_wider(id_cols = c(.id, bclass),

names_from = token,

values_from = tf_idf,

values_fill = 0) %>%

ungroup()Logistic regression as NN

To get a feel for keras, first we’ll fit a logistic regression model.

Recall that in class it was mentioned that standard statistical models can be described by neural networks with no hidden layers; along these lines, standard statistical models can also be fit using optimization routines for neural network training.

Use the code chunk below to get the TF-IDF values for the (alphabetically) first ten tokens. We’ll use these as predictors.

# extract first ten features

x_train <- train_dtm %>%

ungroup() %>%

select(-.id, -bclass) %>%

select(1:10) %>%

as.matrix()

# extract labels and coerce to binary

y_train <- train_dtm %>%

pull(bclass) %>%

factor() %>%

as.numeric() - 1This is purely for illustration purposes; any model using these variables should not perform well at all because ten tokens won’t contain much information about the classes.

To use keras, we’ll go through a few steps that are generally not done separately for fitting statistical models:

Model specification, i.e.,defining an architecture

Model configuration, i.e., specifying a loss function and fitting method

Model training, i.e., computing estimates for the parameters

Model specification

Model architecture is defined layer-by-layer. Keras has some preconfigured model types: for feedforward networks, use keras_model_sequential() .

# specify model type

model <- keras_model_sequential(input_shape = 10)The input_shape argument specifies the number of units for the input layer – in other words, the number of predictors.

At this stage, the model is just scaffolding:

summary(model)Model: "sequential"

________________________________________________________________________________

Layer (type) Output Shape Param #

================================================================================

================================================================================

Total params: 0 (0.00 Byte)

Trainable params: 0 (0.00 Byte)

Non-trainable params: 0 (0.00 Byte)

________________________________________________________________________________Now layers can be added one-by-one. For now we’ll just add an output layer – one unit. layer_dense will specify that the previous layer is fully-connected to the added layer.

# add output layer

model <- model %>% layer_dense(1) The model summary now shows the output layer.

summary(model)Model: "sequential"

________________________________________________________________________________

Layer (type) Output Shape Param #

================================================================================

dense (Dense) (None, 1) 11

================================================================================

Total params: 11 (44.00 Byte)

Trainable params: 11 (44.00 Byte)

Non-trainable params: 0 (0.00 Byte)

________________________________________________________________________________Lastly, we’ll add a sigmoid activation function:

model <- model %>%

layer_activation(activation = 'sigmoid')Since there is no hidden layer, our model is

\[ \begin{aligned} \mathbb{E}Y &= \frac{1}{1 + \exp\{-T\}} \\ T &= X\beta \end{aligned} \]

Notice that this is the logistic regression model (without the distributional assumption).

Model configuration

Configuring a keras model consists in equipping it with a loss and an optimization method. Optionally, metrics that you’d like computed at each training epoch can be included.

model %>% compile(

loss = 'binary_crossentropy',

optimizer = tf$keras$optimizers$legacy$SGD(),

metrics = 'binary_accuracy'

)This says that to train the model, we’ll minimize binary cross-entropy on the training data using stochastic gradient descent.

To train for 10 epochs, pipe the model into fit() and supply the training data. Note that the training data must be numeric, not a data frame.

history <- model %>%

fit(x = x_train,

y = y_train,

epochs = 10)Epoch 1/10

14/14 - 8s - loss: 0.6932 - binary_accuracy: 0.4796 - 8s/epoch - 589ms/step

Epoch 2/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4661 - 42ms/epoch - 3ms/step

Epoch 3/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4864 - 11ms/epoch - 807us/step

Epoch 4/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4548 - 10ms/epoch - 709us/step

Epoch 5/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4910 - 10ms/epoch - 701us/step

Epoch 6/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4638 - 10ms/epoch - 692us/step

Epoch 7/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.4819 - 9ms/epoch - 659us/step

Epoch 8/10

14/14 - 0s - loss: 0.6932 - binary_accuracy: 0.5023 - 9ms/epoch - 609us/step

Epoch 9/10

14/14 - 0s - loss: 0.6931 - binary_accuracy: 0.4977 - 8ms/epoch - 598us/step

Epoch 10/10

14/14 - 0s - loss: 0.6931 - binary_accuracy: 0.5045 - 8ms/epoch - 607us/stepThe following commands will retrieve weights, evaluated loss ans specified metrics, and predictions.

# retrieve weights

keras::get_weights(model)[[1]]

[,1]

[1,] -0.14723948

[2,] 0.48718530

[3,] 0.01335012

[4,] 0.41890398

[5,] 0.03589990

[6,] 0.59800798

[7,] 0.59559381

[8,] 0.18664885

[9,] 0.73079646

[10,] -0.41445050

[[2]]

[1] -0.01270032# evaluate on specified data

evaluate(model, x_train, y_train)14/14 - 0s - loss: 0.6931 - binary_accuracy: 0.5045 - 296ms/epoch - 21ms/step loss binary_accuracy

0.6930981 0.5045249 # compute predictions

model(x_train) %>% head()tf.Tensor(

[[0.5039001 ]

[0.49685133]

[0.49650043]

[0.49835187]

[0.49853253]

[0.49682495]], shape=(6, 1), dtype=float32)Check your understanding

Discuss with your neighbor:

- How many parameters does this model have?

- Do the number of parameters match your expectations?

- Why will the parameter estimates not match the result of

glm()? - Would further training epochs improve the performance?

Single-layer network

Now that you have a sense of the basic keras syntax and model specification/configuration/training procedure, we can train a proper network with one (or more!) hidden layers.

First coerce the DTM into the format needed for training.

# store full DTM as a matrix

x_train <- train_dtm %>%

select(-bclass, -.id) %>%

as.matrix()Now configure a model with one hidden layer having 10 units. Notice that the architecture can be defined by one sequence of pipes rather than stepwise as before.

model <- keras_model_sequential(input_shape = ncol(x_train)) %>%

layer_dense(10) %>%

layer_dense(1) %>%

layer_activation(activation = 'sigmoid')

summary(model)Model: "sequential_1"

________________________________________________________________________________

Layer (type) Output Shape Param #

================================================================================

dense_2 (Dense) (None, 10) 167410

dense_1 (Dense) (None, 1) 11

activation_1 (Activation) (None, 1) 0

================================================================================

Total params: 167421 (653.99 KB)

Trainable params: 167421 (653.99 KB)

Non-trainable params: 0 (0.00 Byte)

________________________________________________________________________________Notice the number of parameters. (Does this match your expectation?) Configure the model:

model %>%

compile(

loss = 'binary_crossentropy',

optimizer = tf$keras$optimizers$legacy$SGD(),

metrics = 'binary_accuracy'

)And finally, train:

history <- model %>%

fit(x = x_train,

y = y_train,

epochs = 50)Epoch 1/50

14/14 - 0s - loss: 0.6931 - binary_accuracy: 0.5068 - 410ms/epoch - 29ms/step

Epoch 2/50

14/14 - 0s - loss: 0.6929 - binary_accuracy: 0.5407 - 19ms/epoch - 1ms/step

Epoch 3/50

14/14 - 0s - loss: 0.6927 - binary_accuracy: 0.5023 - 18ms/epoch - 1ms/step

Epoch 4/50

14/14 - 0s - loss: 0.6926 - binary_accuracy: 0.5362 - 19ms/epoch - 1ms/step

Epoch 5/50

14/14 - 0s - loss: 0.6925 - binary_accuracy: 0.5181 - 18ms/epoch - 1ms/step

Epoch 6/50

14/14 - 0s - loss: 0.6925 - binary_accuracy: 0.5158 - 18ms/epoch - 1ms/step

Epoch 7/50

14/14 - 0s - loss: 0.6923 - binary_accuracy: 0.5204 - 17ms/epoch - 1ms/step

Epoch 8/50

14/14 - 0s - loss: 0.6922 - binary_accuracy: 0.5249 - 16ms/epoch - 1ms/step

Epoch 9/50

14/14 - 0s - loss: 0.6921 - binary_accuracy: 0.5204 - 16ms/epoch - 1ms/step

Epoch 10/50

14/14 - 0s - loss: 0.6920 - binary_accuracy: 0.5226 - 16ms/epoch - 1ms/step

Epoch 11/50

14/14 - 0s - loss: 0.6918 - binary_accuracy: 0.5226 - 17ms/epoch - 1ms/step

Epoch 12/50

14/14 - 0s - loss: 0.6918 - binary_accuracy: 0.5271 - 16ms/epoch - 1ms/step

Epoch 13/50

14/14 - 0s - loss: 0.6917 - binary_accuracy: 0.5226 - 16ms/epoch - 1ms/step

Epoch 14/50

14/14 - 0s - loss: 0.6917 - binary_accuracy: 0.5226 - 16ms/epoch - 1ms/step

Epoch 15/50

14/14 - 0s - loss: 0.6915 - binary_accuracy: 0.5271 - 16ms/epoch - 1ms/step

Epoch 16/50

14/14 - 0s - loss: 0.6914 - binary_accuracy: 0.5271 - 16ms/epoch - 1ms/step

Epoch 17/50

14/14 - 0s - loss: 0.6913 - binary_accuracy: 0.5294 - 16ms/epoch - 1ms/step

Epoch 18/50

14/14 - 0s - loss: 0.6912 - binary_accuracy: 0.5271 - 16ms/epoch - 1ms/step

Epoch 19/50

14/14 - 0s - loss: 0.6911 - binary_accuracy: 0.5294 - 16ms/epoch - 1ms/step

Epoch 20/50

14/14 - 0s - loss: 0.6910 - binary_accuracy: 0.5294 - 16ms/epoch - 1ms/step

Epoch 21/50

14/14 - 0s - loss: 0.6909 - binary_accuracy: 0.5249 - 16ms/epoch - 1ms/step

Epoch 22/50

14/14 - 0s - loss: 0.6909 - binary_accuracy: 0.5271 - 15ms/epoch - 1ms/step

Epoch 23/50

14/14 - 0s - loss: 0.6907 - binary_accuracy: 0.5317 - 16ms/epoch - 1ms/step

Epoch 24/50

14/14 - 0s - loss: 0.6906 - binary_accuracy: 0.5294 - 15ms/epoch - 1ms/step

Epoch 25/50

14/14 - 0s - loss: 0.6906 - binary_accuracy: 0.5362 - 16ms/epoch - 1ms/step

Epoch 26/50

14/14 - 0s - loss: 0.6904 - binary_accuracy: 0.5249 - 15ms/epoch - 1ms/step

Epoch 27/50

14/14 - 0s - loss: 0.6903 - binary_accuracy: 0.5339 - 14ms/epoch - 1ms/step

Epoch 28/50

14/14 - 0s - loss: 0.6902 - binary_accuracy: 0.5271 - 15ms/epoch - 1ms/step

Epoch 29/50

14/14 - 0s - loss: 0.6902 - binary_accuracy: 0.5385 - 16ms/epoch - 1ms/step

Epoch 30/50

14/14 - 0s - loss: 0.6901 - binary_accuracy: 0.5339 - 15ms/epoch - 1ms/step

Epoch 31/50

14/14 - 0s - loss: 0.6900 - binary_accuracy: 0.5339 - 15ms/epoch - 1ms/step

Epoch 32/50

14/14 - 0s - loss: 0.6900 - binary_accuracy: 0.5385 - 15ms/epoch - 1ms/step

Epoch 33/50

14/14 - 0s - loss: 0.6898 - binary_accuracy: 0.5362 - 15ms/epoch - 1ms/step

Epoch 34/50

14/14 - 0s - loss: 0.6897 - binary_accuracy: 0.5317 - 16ms/epoch - 1ms/step

Epoch 35/50

14/14 - 0s - loss: 0.6896 - binary_accuracy: 0.5362 - 16ms/epoch - 1ms/step

Epoch 36/50

14/14 - 0s - loss: 0.6895 - binary_accuracy: 0.5339 - 15ms/epoch - 1ms/step

Epoch 37/50

14/14 - 0s - loss: 0.6893 - binary_accuracy: 0.5385 - 15ms/epoch - 1ms/step

Epoch 38/50

14/14 - 0s - loss: 0.6893 - binary_accuracy: 0.5385 - 15ms/epoch - 1ms/step

Epoch 39/50

14/14 - 0s - loss: 0.6892 - binary_accuracy: 0.5362 - 15ms/epoch - 1ms/step

Epoch 40/50

14/14 - 0s - loss: 0.6891 - binary_accuracy: 0.5407 - 15ms/epoch - 1ms/step

Epoch 41/50

14/14 - 0s - loss: 0.6890 - binary_accuracy: 0.5407 - 16ms/epoch - 1ms/step

Epoch 42/50

14/14 - 0s - loss: 0.6889 - binary_accuracy: 0.5407 - 15ms/epoch - 1ms/step

Epoch 43/50

14/14 - 0s - loss: 0.6888 - binary_accuracy: 0.5385 - 15ms/epoch - 1ms/step

Epoch 44/50

14/14 - 0s - loss: 0.6887 - binary_accuracy: 0.5407 - 15ms/epoch - 1ms/step

Epoch 45/50

14/14 - 0s - loss: 0.6886 - binary_accuracy: 0.5407 - 15ms/epoch - 1ms/step

Epoch 46/50

14/14 - 0s - loss: 0.6886 - binary_accuracy: 0.5430 - 15ms/epoch - 1ms/step

Epoch 47/50

14/14 - 0s - loss: 0.6884 - binary_accuracy: 0.5407 - 16ms/epoch - 1ms/step

Epoch 48/50

14/14 - 0s - loss: 0.6883 - binary_accuracy: 0.5407 - 15ms/epoch - 1ms/step

Epoch 49/50

14/14 - 0s - loss: 0.6883 - binary_accuracy: 0.5407 - 16ms/epoch - 1ms/step

Epoch 50/50

14/14 - 0s - loss: 0.6883 - binary_accuracy: 0.5430 - 15ms/epoch - 1ms/stepplot(history)

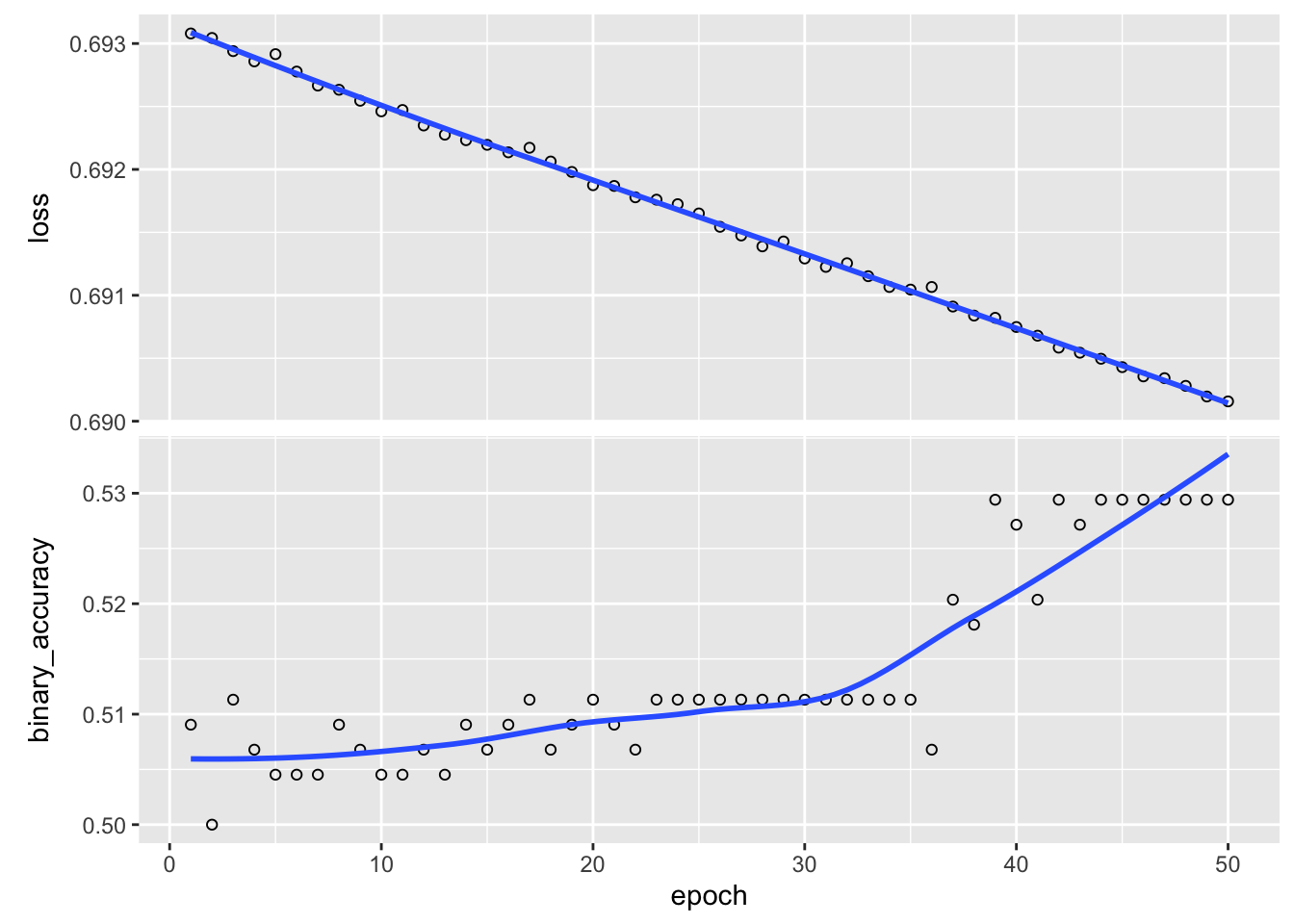

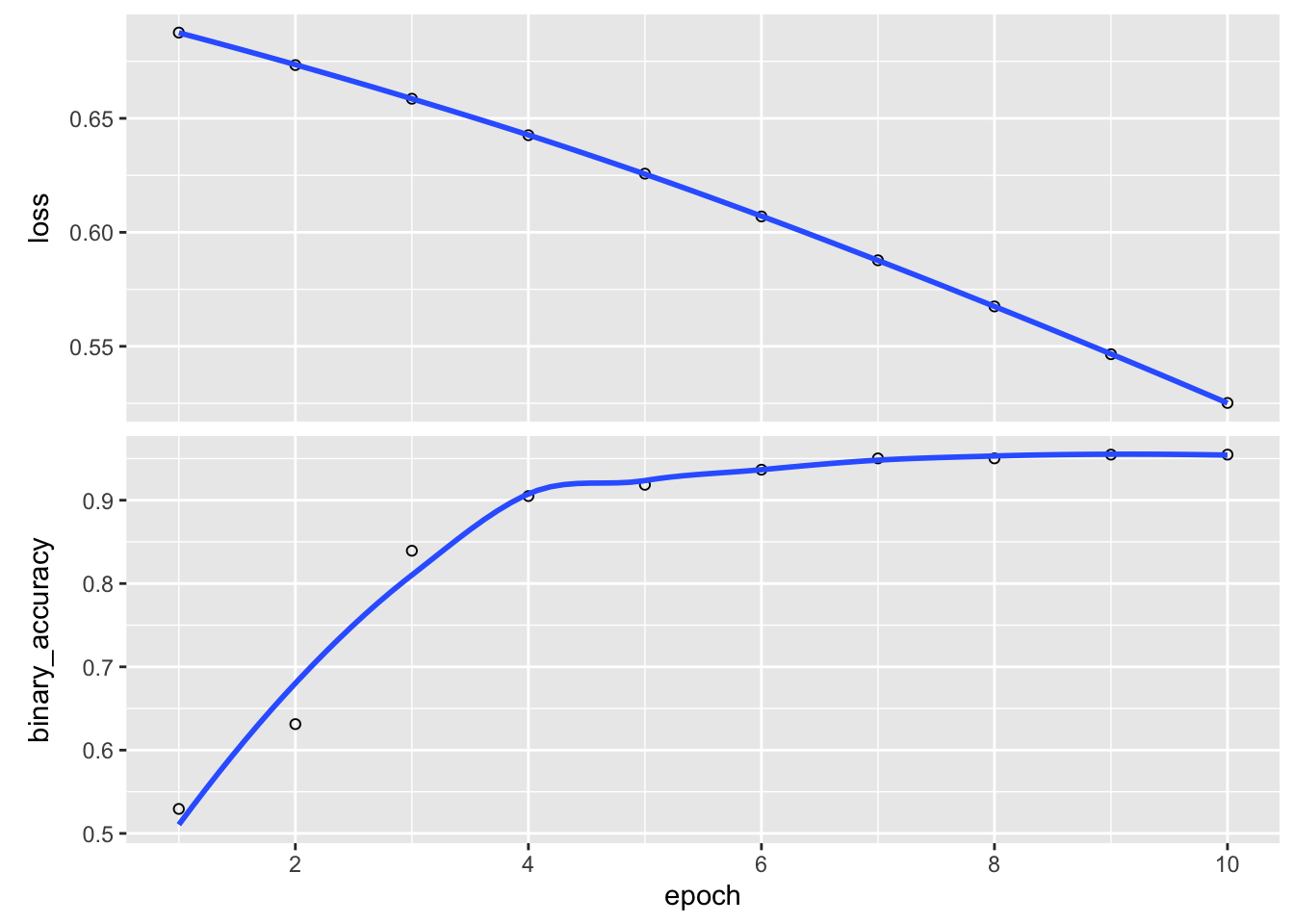

Notice that even after 50 epochs the results are still quite poor. As mentioned in class, the choice of optimization method can have a big impact on the quality of estimates. If we train the model instead using Adam, good accuracy is achieved after just a few epochs:

# change the optimizer

model %>%

compile(

loss = 'binary_crossentropy',

optimizer = 'adam',

metrics = 'binary_accuracy'

)

# re-train

history <- model %>%

fit(x = x_train,

y = y_train,

epochs = 10)Epoch 1/10

14/14 - 2s - loss: 0.6842 - binary_accuracy: 0.6086 - 2s/epoch - 143ms/step

Epoch 2/10

14/14 - 0s - loss: 0.6638 - binary_accuracy: 0.8575 - 19ms/epoch - 1ms/step

Epoch 3/10

14/14 - 0s - loss: 0.6441 - binary_accuracy: 0.9208 - 19ms/epoch - 1ms/step

Epoch 4/10

14/14 - 0s - loss: 0.6237 - binary_accuracy: 0.9253 - 18ms/epoch - 1ms/step

Epoch 5/10

14/14 - 0s - loss: 0.6024 - binary_accuracy: 0.9321 - 19ms/epoch - 1ms/step

Epoch 6/10

14/14 - 0s - loss: 0.5807 - binary_accuracy: 0.9457 - 18ms/epoch - 1ms/step

Epoch 7/10

14/14 - 0s - loss: 0.5581 - binary_accuracy: 0.9548 - 17ms/epoch - 1ms/step

Epoch 8/10

14/14 - 0s - loss: 0.5358 - binary_accuracy: 0.9548 - 17ms/epoch - 1ms/step

Epoch 9/10

14/14 - 0s - loss: 0.5134 - binary_accuracy: 0.9525 - 17ms/epoch - 1ms/step

Epoch 10/10

14/14 - 0s - loss: 0.4907 - binary_accuracy: 0.9548 - 17ms/epoch - 1ms/stepplot(history)

Validation data

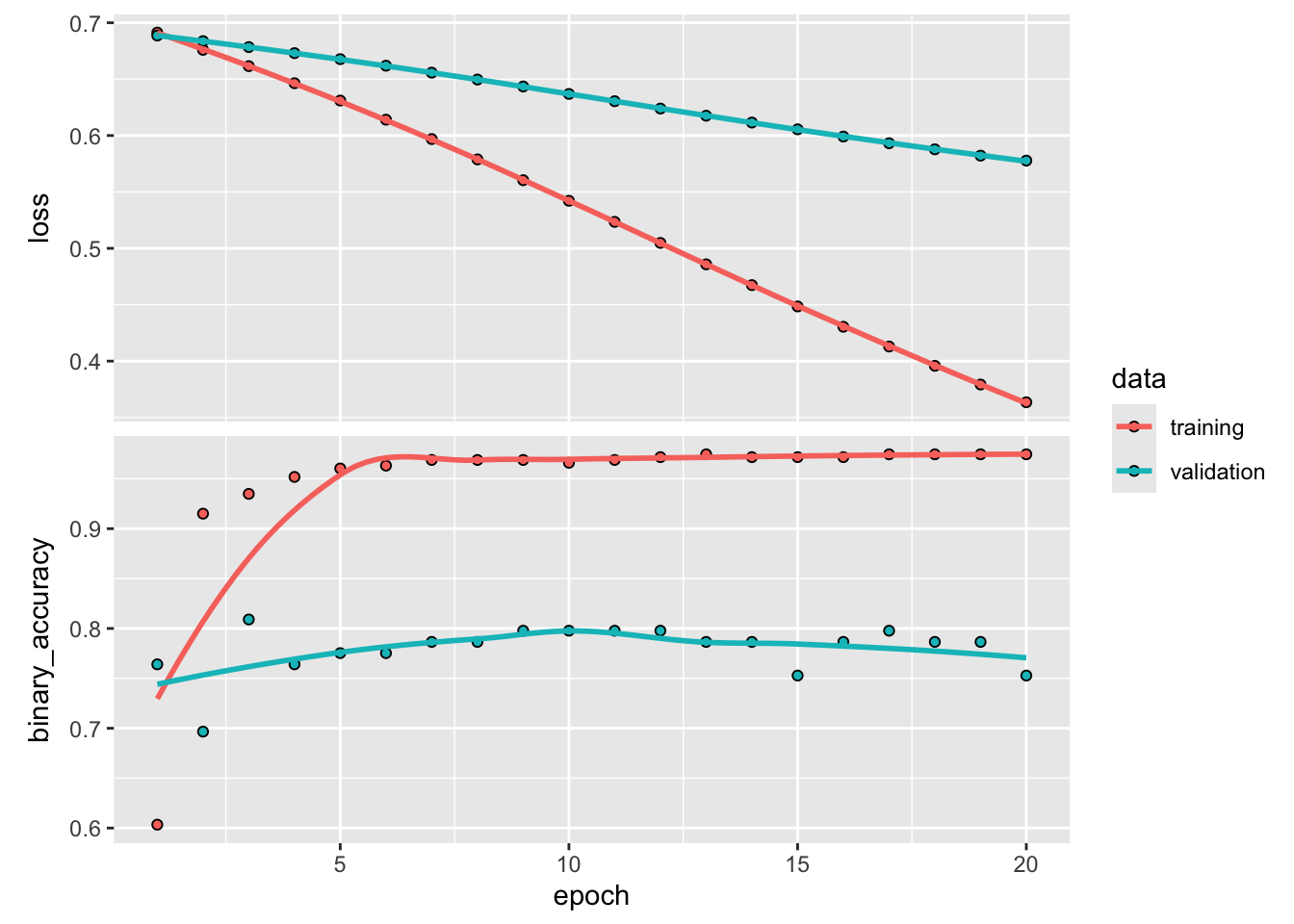

Often training data are sub-partitioned into training and ‘validation’ sets. The validation set can be used to provide a soft estimate of accuracy during training.

This provides one strategy to avoid overfitting – the practitioner should only train as long as validation accuracy continues to increase.

Keras makes that easy by supplying an extra argument to fit(). The code chunk below trains for longer and uses 20% of the training data for validation. You should see that the training accuracy gets quite high, but the validation accuracy plateaus around 80%.

# redefine model

model <- keras_model_sequential(input_shape = ncol(x_train)) %>%

layer_dense(10) %>%

layer_dense(1) %>%

layer_activation(activation = 'sigmoid')

model %>%

compile(

loss = 'binary_crossentropy',

optimizer = 'adam',

metrics = 'binary_accuracy'

)

# train with validation split

history <- model %>%

fit(x = x_train,

y = y_train,

epochs = 20,

validation_split = 0.2)Epoch 1/20

12/12 - 1s - loss: 0.6917 - binary_accuracy: 0.6317 - val_loss: 0.6898 - val_binary_accuracy: 0.7753 - 714ms/epoch - 59ms/step

Epoch 2/20

12/12 - 0s - loss: 0.6798 - binary_accuracy: 0.9405 - val_loss: 0.6857 - val_binary_accuracy: 0.7978 - 50ms/epoch - 4ms/step

Epoch 3/20

12/12 - 0s - loss: 0.6678 - binary_accuracy: 0.9575 - val_loss: 0.6814 - val_binary_accuracy: 0.7865 - 32ms/epoch - 3ms/step

Epoch 4/20

12/12 - 0s - loss: 0.6551 - binary_accuracy: 0.9603 - val_loss: 0.6768 - val_binary_accuracy: 0.8090 - 32ms/epoch - 3ms/step

Epoch 5/20

12/12 - 0s - loss: 0.6416 - binary_accuracy: 0.9632 - val_loss: 0.6718 - val_binary_accuracy: 0.7753 - 31ms/epoch - 3ms/step

Epoch 6/20

12/12 - 0s - loss: 0.6276 - binary_accuracy: 0.9632 - val_loss: 0.6668 - val_binary_accuracy: 0.7753 - 32ms/epoch - 3ms/step

Epoch 7/20

12/12 - 0s - loss: 0.6122 - binary_accuracy: 0.9632 - val_loss: 0.6616 - val_binary_accuracy: 0.7753 - 33ms/epoch - 3ms/step

Epoch 8/20

12/12 - 0s - loss: 0.5965 - binary_accuracy: 0.9603 - val_loss: 0.6564 - val_binary_accuracy: 0.7865 - 32ms/epoch - 3ms/step

Epoch 9/20

12/12 - 0s - loss: 0.5802 - binary_accuracy: 0.9603 - val_loss: 0.6507 - val_binary_accuracy: 0.7978 - 31ms/epoch - 3ms/step

Epoch 10/20

12/12 - 0s - loss: 0.5633 - binary_accuracy: 0.9632 - val_loss: 0.6446 - val_binary_accuracy: 0.7978 - 30ms/epoch - 3ms/step

Epoch 11/20

12/12 - 0s - loss: 0.5456 - binary_accuracy: 0.9632 - val_loss: 0.6383 - val_binary_accuracy: 0.7978 - 31ms/epoch - 3ms/step

Epoch 12/20

12/12 - 0s - loss: 0.5278 - binary_accuracy: 0.9632 - val_loss: 0.6322 - val_binary_accuracy: 0.7978 - 30ms/epoch - 3ms/step

Epoch 13/20

12/12 - 0s - loss: 0.5096 - binary_accuracy: 0.9632 - val_loss: 0.6255 - val_binary_accuracy: 0.7865 - 32ms/epoch - 3ms/step

Epoch 14/20

12/12 - 0s - loss: 0.4907 - binary_accuracy: 0.9632 - val_loss: 0.6191 - val_binary_accuracy: 0.7753 - 31ms/epoch - 3ms/step

Epoch 15/20

12/12 - 0s - loss: 0.4732 - binary_accuracy: 0.9660 - val_loss: 0.6136 - val_binary_accuracy: 0.7978 - 30ms/epoch - 3ms/step

Epoch 16/20

12/12 - 0s - loss: 0.4552 - binary_accuracy: 0.9660 - val_loss: 0.6080 - val_binary_accuracy: 0.7978 - 34ms/epoch - 3ms/step

Epoch 17/20

12/12 - 0s - loss: 0.4377 - binary_accuracy: 0.9660 - val_loss: 0.6022 - val_binary_accuracy: 0.7978 - 31ms/epoch - 3ms/step

Epoch 18/20

12/12 - 0s - loss: 0.4207 - binary_accuracy: 0.9688 - val_loss: 0.5967 - val_binary_accuracy: 0.7753 - 32ms/epoch - 3ms/step

Epoch 19/20

12/12 - 0s - loss: 0.4036 - binary_accuracy: 0.9660 - val_loss: 0.5914 - val_binary_accuracy: 0.7753 - 32ms/epoch - 3ms/step

Epoch 20/20

12/12 - 0s - loss: 0.3873 - binary_accuracy: 0.9660 - val_loss: 0.5863 - val_binary_accuracy: 0.7865 - 31ms/epoch - 3ms/stepplot(history)

Compute predictions from your trained network on the test partition. Estimate the predictive accuracy. Is it any better than what we managed with principal component regression in class?